For my final project in Poetics of Space, I made a piece called monumoment. A portmentau of monument and moment, this piece creates a monument dedicated to the audience, memorializing the moment the audience engages with the work. I consider it a kind of sculpture and sound installation inside of a virtual reality space.

The technique involves recording audio from the user, using the Oculus Rift’s built in microphone. When a button is held down, the user can record themselves speaking. Upon release, that audio clip is saved in the program. I developed a system inside of Unity to do the following:

- Take the audio clip, and distribute it to every sculpture that is made (every sculpture consists of one or more boxes)

- For every box inside of the sculpture, the audio is sliced into that many pieces. A sculpture with four boxes will slice the audio into four pieces. 8 boxes, eight slices. 100 boxes, 100 slices. etc.

- Each slice of audio is assigned and attached to each box and loops infinitely. Each box’s audio settings are configured as spatialized audio, so that the farther away the box is, the more quiet it becomes.

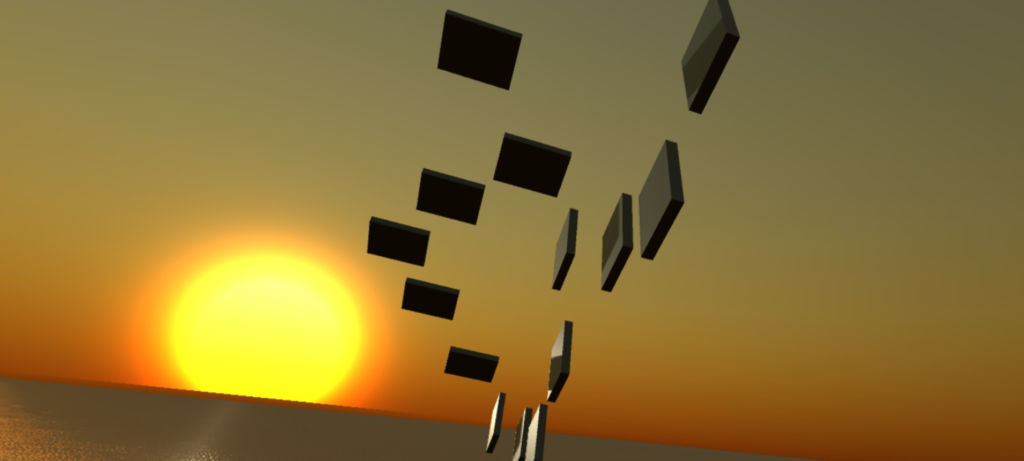

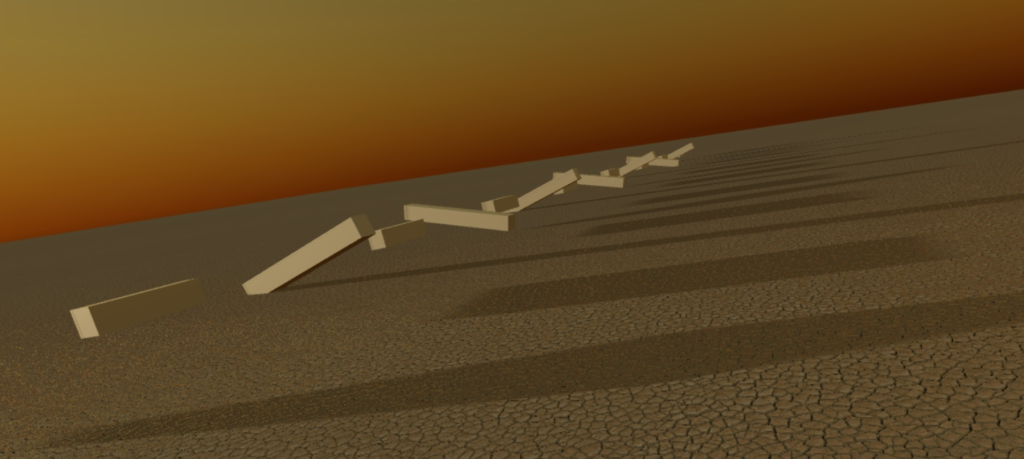

This general concept was approached in my midterm, however in that instance, the slicing of the audio was done manually beforehand. The content of that piece, Green Apples, was an audio interview with my colleague Akmyrat Tuyliyev. The audio of the interview is played without any spatialization while variations on this sculpture concept were presented to the viewer. The last sculpture is mobile, with the slices of audio approaching the audience in sequence.

(this approach sequence starts at 3:41)

While the interview was conducted before constructing the scene, and then the slicing technique manually implemented afterwards, I received user feedback that indicated people had the feeling that this interview was being conducted “inside” of the piece somehow. As if I had made the VR environment, and somehow had the conversation live, with all effects and scene changes being dynamic. This was not actually the case.

While being able to automate my slicing technique was always a goal, and being able to use the microphone was a good way to test it as I coded it, this feedback solidified my artistic impulses towards having conversations with people.

Green Apples was partially about putting the interviewee’s voice “inside” of a sculpture. Akmyrat speaks, but the sculpture “speaks” as well, emitting his voice. For my final, I wanted to make a piece that could take any audience and give them a sculpture as well.

The majority of the work on this project was more technical/programming in nature rather than asset building and 3d modeling. This semester was my first time actually using Unity in a real and deep manner, so there was a lot to learn. Without cataloging all of my trials and tribulations, I will say that I re-appreciate “simple” experiences in the digital creative space.

When I say things like, “hold down a button and record audio,” there is much time that went into making that work in an intuitive way. Unity does not let you simply record an Audio Clip that lasts for as long as a button is pressed. You have to determine how long you want to record, and that number is fixed. Then you have to determine how long the button was pressed (in audio samples, not seconds), create a blank Audio Clip object of that length, copy the information from the first Audio Clip into the new smaller one (indicating at what point to start, and the extraneous audio gets cut), assign the new smaller one to the Audio Source, then destroy the old one.

An early iteration worked with simpler programming, yet would not work with multiple sound sculptures existing in the same scene. This massively changed the way copying of clips worked, and how redistributing and re-slicing needed to be done every time the record button is pressed.

So, with all this in mind: simple does not mean easy.

There were other ideas I had considered adding in terms of manipulating the sculptures. I researched rope or swing-like mechanics for the sculptures (it’s own time sink). I also had hopes for more tangible “grabbing and turning” type UX during a free play phase at the end of the experience.

While there are some of these aspects I would like to explore further, some things seemed like a bit of a distraction from the main idea I was trying to communicate. At a certain point, I moved on and dedicated time to the voice over narration.

There are two other drafts of the voice over, and all three have a good amount of outtakes and alternate versions of lines. The final sonic aesthetic is *almost* AMSR style delivery and quality. Perhaps NPR level. I wanted something very close to the microphone, very quiet and calm. This audio is *not* spatialized. Bass is boosted, adding a bit of warmth. With these qualities, I hope to make it feel like I am inside the audience’s head.

And the voice over is me directly communicating to the viewer. First, I explain what is going on: The audience is in a VR space, they can record. Recordings can be put inside of shapes. Recordings can be split up and put into multiple shapes. Shapes can move. When they move in certain ways, we have a visualization of tiny moments of audio. If the user is looking at 16 blocks, and then 15 disappear, they can recognize and appreciate that they are looking at 1/16 of a second or two of audio.

The message: small snippet they had literally been listening to the whole time, but not specifically appreciating.

This is where I attempt to draw a connection to the audience with further narration. We can examine, and perhaps appreciate the beauty and strangeness of this tiny fraction in time. This is a moment. A moment given by their voice, to me the artist, in order to make the piece function.

The moment of engagement from audience to artist is memorialized and presented to the audience as thanks.

If the button has been pressed, audio is in the piece, which means that the audience has given something of themselves (however small). This sentiment is important to me. On a larger scale, appreciation of moments and prompting meditation in ourselves and others is valuable. But even more specifically, I believe that the affordances of VR can make users very vulnerable. I am taking people’s voices, their names, and using it. They are strapping something onto their eyes, putting headphones on their head, all while knowing that I am standing right next to them. I imagine VR as people dipping their heads into other people’s art.

That is vulnerable. And while virtual reality has enough awe factor to let people put these concerns aside, I want to re-appreciate this vulnerability. Imagine my art piece was to ask people to put on a blind fold, ear muffs, put their head in a box, I would be recording them speak… and oh, by the way I’ll just be here standing next to you watching what you do. You know, just in case something goes wrong. In you go!

That would be asking a lot of the audience. This is something to remind ourselves of when we make VR experiences, and I thought it could be good fodder for a more uplifting consideration: thank you for even putting the headset on.

So with all this in mind, I consider this piece to be about the moment of engagement with art. I wondered if I was being a bit too tender, sappy, or “twee” on the messaging, but I really do feel this way. I very much appreciate that people engage with the things I make. I never want to take it for granted, I am thankful, and I consider it a special moment.