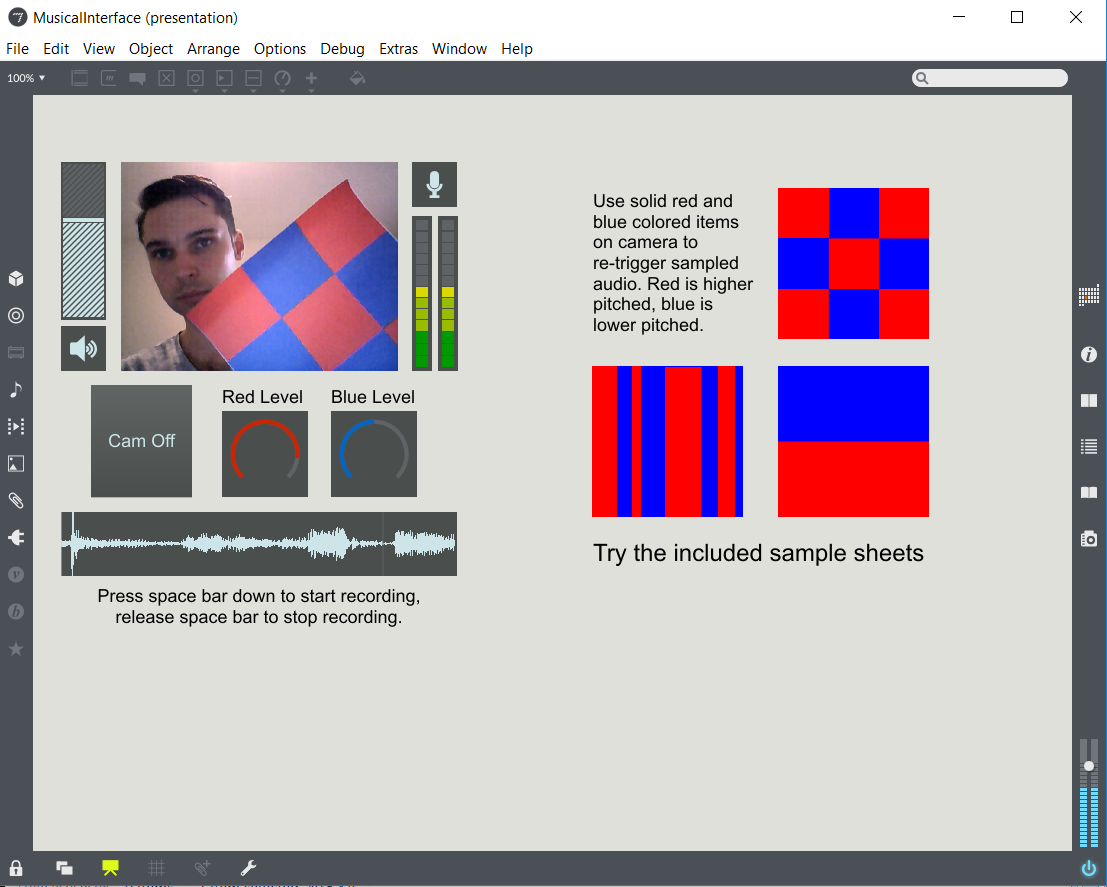

For the IDS final, I’m going to combine it with my Physical Computation final and build an interface for my Mic Cuff Controller. While searching the ACM Digital Library for articles, I found some interesting takes on ideas similar to what I’m trying to physically build.

The E-Mic seems to be in a similar realm. Incidentally, one of the prototype proposals on page 5 is the closest thing I’ve found to what I’ve been physically trying to implement (fig 22):

http://dl.acm.org/citation.cfm?id=1085743

And off the site, a prototype called “Project Tahoe”:

http://vhosts.eecs.umich.edu/nime2012/Proceedings/papers/202_Final_Manuscript.pdf

That said, I believe one of my more valuable discoveries in the ACM DL came when I started broadening my search terms. I’m starting to commit more to the physical shape of my device, and I know that, at least for now, I want to stay somewhat focused on musical applications for it. I want an engaging, fun user testing scenario, and I think musical activity will bring that about.

However, because I want to focus on a more casual user for the time being, I need to be careful about the software used to demonstrate what the Mic Cuff Controller can do. With a certain amount of functional prototyping done, I am starting to focus on making user-friendly software that allows for customization.

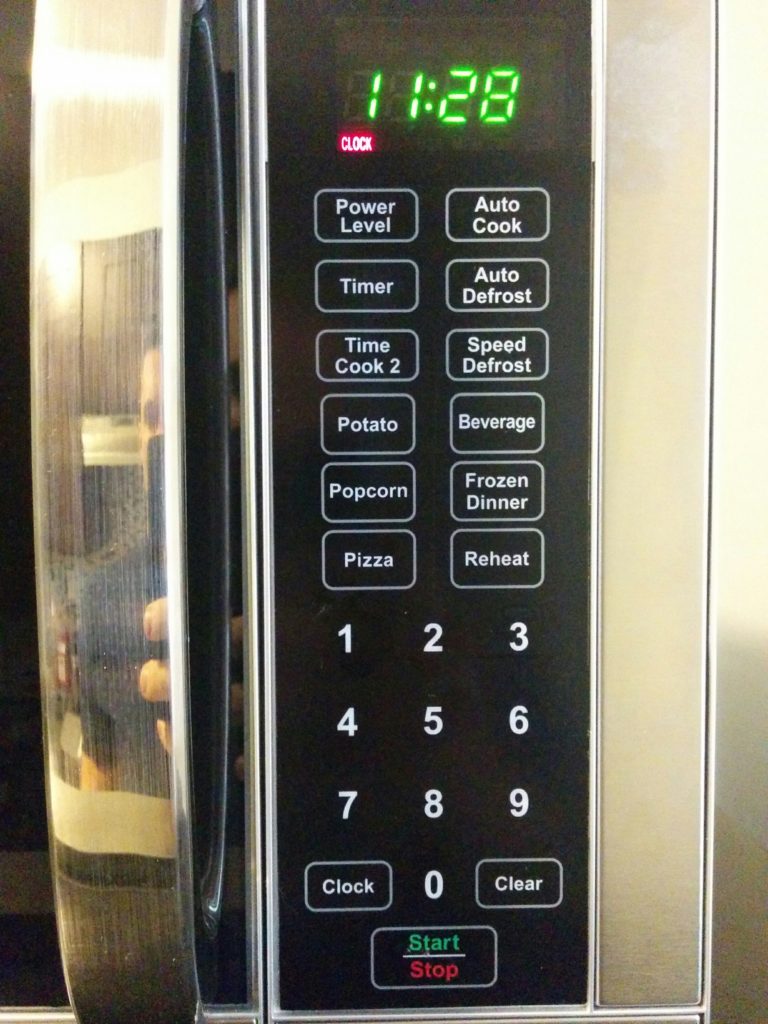

Letting users who may not be musically inclined play with a musical device poses certain design challenges. We want users to be able to change the state of playback and clearly act on their decisions. However, an overly technical UI could be overwhelming and may cause users to disengage. In trying to course correct for that, though, you could take away too many options and end up with functionality that is too limited. This could also lead users to disengage, because they can’t do much with the program. My IDS final will focus on how to strike this balance.

I found a really interesting paper related to these ideas:

User Customization of a Word Processor

http://dl.acm.org/citation.cfm?id=238541&CFID=870155206&CFTOKEN=24252391

This is a 1996 paper from Stanley R. Page, Todd J. Johnsgard, Uhl Albert, and C. Dennis Allen about “identifying the customization changes users typically make to their word processors.” The main takeaways are that many people customize their software when given the chance, and that the researchers didn’t expect this: “A surprising 92% of the participants in this study did some form of customization of the software.” Additionally, the only predictor of whether a person customized was how often they used the program. Five categories of customization activities were identified: 1) customizing functionality, 2) customizing access to interface tools, 3) customizing access to functionality, 4) customizing visual appearance, and 5) setting general preferences. (“Findings”, p342-343)

I could go on about everything that has inspired me in this paper, but then I would just wind up re-typing the entire paper on this blog. Even though this research was done 20 years ago, I think this paper is a great reminder of fundamental UI principles and customization best practices. I’ve been meditating on this research and thinking of the best ways to heed its advice or push the ideas forward. One lead I’ve been pursuing pertains to the last paragraph:

“Finally, studying the patterns of users’ customization should help us move our adaptable systems to self adapting ones; systems that will accurately anticipate the customers’ work and respond by providing the appropriate tools and services. To this end we need to continue to study when, why, and how users tailor software to accomplish their work.” (p345)

One way to answer that call for more study would be to build the study into the interface itself. A program that analyzes its own usage could change its interface based on that data. Or, more precisely, it could prompt the user with an opportunity to change the interface themselves. A simple example would be to track the number of times the user has opened the program. If, after say 50 times using the program, the user has still not entered an “options” menu to customize it, then on the 51st launch the program prompts the user with information about the options menu and what it can do for them.
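To make that example concrete for myself, here is a rough Python sketch of the launch-counting idea. Everything in it is hypothetical: the names `UsageTracker`, `record_launch`, and `record_options_visit` are made up for illustration, the threshold of 50 is just the number from the example above, and a real version would need to save its state between sessions, which this sketch leaves out.

```python
from dataclasses import dataclass

# Assumed value, taken from the "say, 50 times" example; not a researched number.
PROMPT_THRESHOLD = 50


@dataclass
class UsageTracker:
    """Hypothetical tracker for launches and options-menu visits."""

    launch_count: int = 0
    options_visited: bool = False
    prompt_shown: bool = False

    def record_launch(self) -> bool:
        """Count one launch and report whether to show the options hint now."""
        self.launch_count += 1
        should_prompt = (
            self.launch_count > PROMPT_THRESHOLD  # first true on launch 51
            and not self.options_visited          # user never customized
            and not self.prompt_shown             # only nag once
        )
        if should_prompt:
            self.prompt_shown = True
        return should_prompt

    def record_options_visit(self) -> None:
        """Call this whenever the user opens the options menu."""
        self.options_visited = True
```

The `prompt_shown` flag is my own addition so the hint appears only once, which felt like the minimum needed to keep this from becoming an interruption on every launch.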

These ideas are a little tangential to what I was originally trying to achieve with my project, but I am going to see if I can integrate them into the work. The danger would be basically re-creating Microsoft’s much-hated “Clippy” word processing assistant. But I think there can be something between that and a complete lack of communicated information about a given interface. Given the prevalence of customization among all users, and the knowledge that more frequent users are more likely to customize, I think some form of this approach could be interesting to try.

Even if that particular experiment is more appropriate for another project, the paper has been a great source of research. It is good to know that users *will* customize, why they customize, and how they customize.